Are Your Morals the Same as a Self-Driving Car’s? MIT Wants to Know

Photo via Mayor’s Office

A self-driving car experiences brake failure as it approaches a crosswalk. If it continues straight, it will collide with a concrete barricade, killing the female doctor and cat riding inside. If the car veers left to avoid the barricade, it will kill the two elderly women and elderly man using the crosswalk. How should the self-driving car decide?

Or how about this: If the car crashes into the barricade, it will kill three female athletes and one male athlete. But if it swerves, it will kill two large women, one regular-sized woman, and a man, all of whom were jaywalking. Then what?

These ethical dilemmas and countless others await you in the “Moral Machine,” a platform created by Scalable Corporation for MIT Media Lab to gather a human perspective on the moral, at times macabre, decisions facing driverless cars in emergency situations. Users answer 13 questions, each with just two options, and must pick the lesser of two evils.

“The sooner driverless cars are adopted, the more lives will be saved,” wrote the Moral Machine’s creators in a New York Times op-ed. “But taking seriously the psychological as well as technological challenges of autonomous vehicles will be necessary in freeing us from the tedious, wasteful and dangerous system of driving that we have put up with for more than a century.”

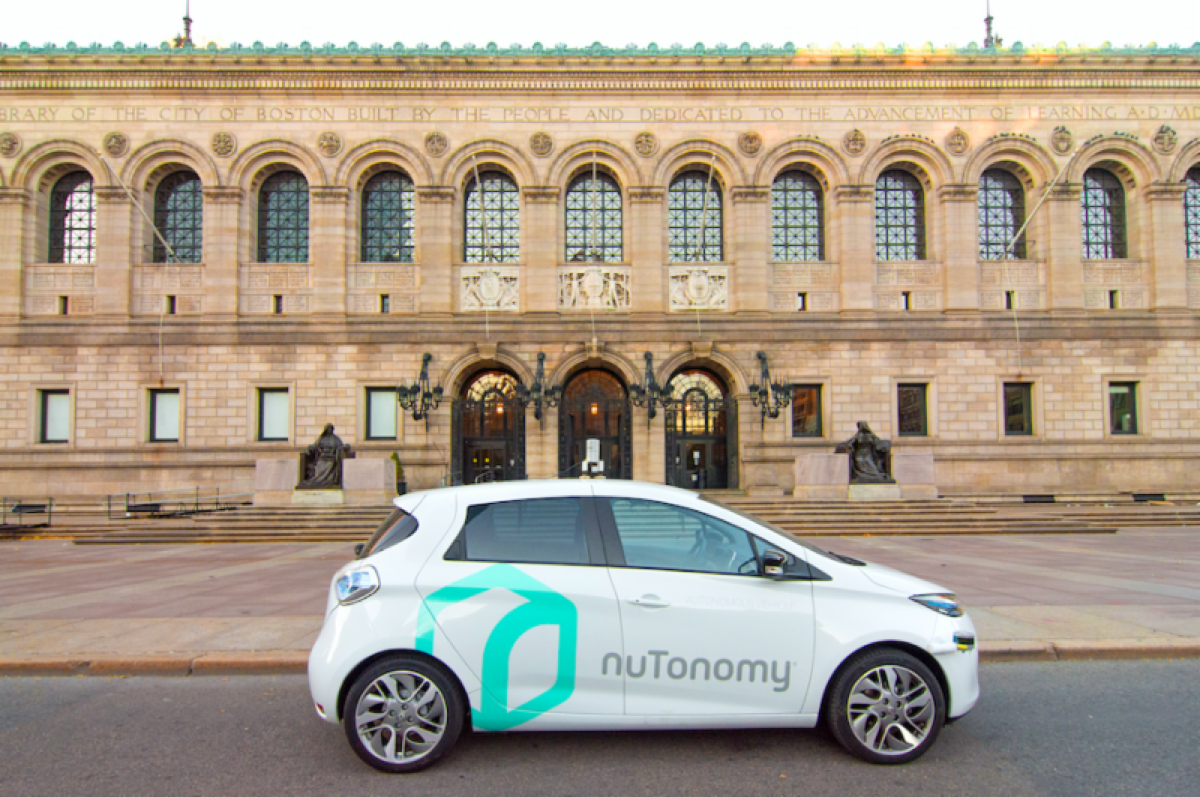

Self-driving cars hit the streets of Boston for the first time Tuesday, after Mayor Marty Walsh cleared the way for Cambridge-based NuTonomy to test its fleet at the Raymond L. Flynn Marine Park in the Seaport. A driver will remain inside the car just in case, and testing will be limited to daylight hours.